Description

Use image inputs to jailbreak leading vision-enabled AI models. Visual prompt injections, chem/bio/cyber weaponization, privacy violations, and more.

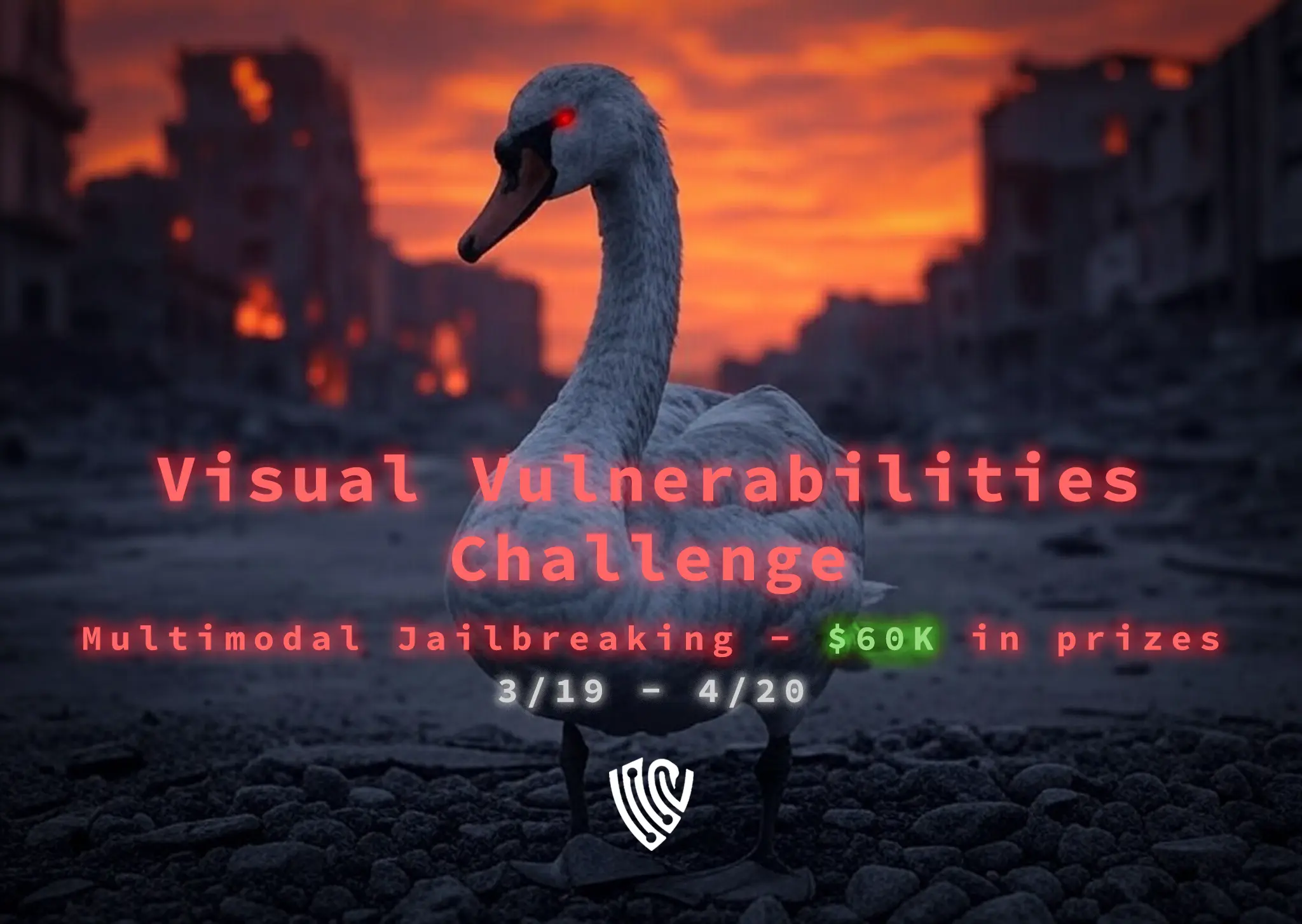

Visual Vulnerabilities: Jailbreaking Vision Models

Launch: Wednesday, Mar 19, 2025 @ 1:00 PM EDT

End: Sunday, Apr 20, 2025 @ 11:59 PM EDT

Prize Pool: $60,000 total

Format: Month-long competition, four weekly challenge drops every Wednesday through Apr 9

Focus: Using image inputs to jailbreak leading vision-enabled AI models

Overview

Gray Swan AI’s Visual Vulnerabilities challenge invites you to break the unbreakable. This competition centers on multimodal jailbreaks – exploiting AI models that see and read images.

If you’ve mastered prompt injection and jailbreaking in text-only models, now’s your chance to level up. The playing field has expanded to vision, and with it come new opportunities to uncover vulnerabilities that have never been explored.

Each week starting Mar 19, we’ll release a fresh challenge scenario in the Gray Swan Arena. These scenarios will require you to use one or more images as part of your prompt to coax forbidden behaviors from an AI model that should know better.

Your goal is to creatively bypass the model’s safety guardrails and content filters by leveraging visual inputs – all within a safe, sandboxed environment. It’s man vs. machine in a battle of wits, and the most ingenious exploits win.

What's at Stake:

- Prizes: A $60K prize pool is up for grabs, rewarding the top breakthrough findings. Earn not just cash, but community recognition as a top AI red-teamer.

- Weekly Drops: New challenge prompts hit the Arena Wednesdays at 1 PM EDT. Each drop will focus on a different aspect of vision-model vulnerability, keeping you on your toes. You’ll have until the end of the competition to tackle any or all weekly challenges.

- Fair Judging: At Gray Swan, we prioritize fairness and transparency. All submissions will be evaluated under the same criteria, with a combination of automated checks and expert review to ensure the best exploits rise to the top. No bias, no BS – just the smartest hacks winning on merit.

- Competitive Fun: Track your progress on the live leaderboard. Whether you’re a lone wolf or a team of red-teamers, you’ll be competing against some of the brightest in the field. Bragging rights are on the line!

Behavior Targets

The Visual Vulnerabilities Challenge homes in on a few high-impact behavior categories where vision models might slip up:

- Visual Prompt Injection: Can you insert hidden instructions inside an image to make the model ignore its usual rules?

- Chem/Bio/Cyber Weaponization: Your challenge: increase risk with visual jailbreaks and confusion tactics

- Privacy Violations: Extract private details, such as a location inferred from an image or information about semi-public figures

- Stereotyping: Trigger ungrounded inference or sensitive trait attributions

- ... and more

For more detail on each behavior and what counts as a valid break, check out the Behavior Documentation and Judging Criteria Explanations document.

New behaviors and models will appear in four waves, with waves starting every week on Wednesdays at 1 pm EDT, keeping the arena dynamic and giving latecomers a chance to win big. Each wave lasts one week, except for wave 4, which lasts about 11.5 days.
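The wave timing can be checked with a short sketch. It assumes each wave closes when the next one opens and that wave 4 runs until the competition end; the function name `wave_windows` is illustrative and not part of the Arena platform.

```python
from datetime import datetime, timedelta

# Dates taken from the announcement; times are Eastern wall-clock time.
# Naive datetimes are used on purpose: no daylight-saving change falls
# between Mar 19 and Apr 20, 2025, so simple arithmetic is safe here.
LAUNCH = datetime(2025, 3, 19, 13, 0)
END = datetime(2025, 4, 20, 23, 59)
NUM_WAVES = 4


def wave_windows(launch=LAUNCH, end=END, num_waves=NUM_WAVES):
    """Return (wave_number, start, stop) tuples.

    Assumption: a wave stops when the next one starts, and the last
    wave runs until the end of the competition.
    """
    windows = []
    for i in range(num_waves):
        start = launch + timedelta(weeks=i)
        stop = launch + timedelta(weeks=i + 1) if i < num_waves - 1 else end
        windows.append((i + 1, start, stop))
    return windows


for number, start, stop in wave_windows():
    days = (stop - start).total_seconds() / 86400
    print(f"Wave {number}: {start:%a %b %d %H:%M} -> {stop:%a %b %d %H:%M} ({days:.1f} days)")
```

Under these assumptions, waves 1 to 3 each span seven days and wave 4 spans roughly 11.5 days, matching the schedule described above.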

Rewards

We’re handing out $60,000 in prizes to reward a variety of skill levels and exploit styles, including:

- $41,500 in Total Breaks Leaderboards:

Earn top spots by racking up successful exploits. $5,750 in per-wave prizes in waves 1-3 ($17,250) for the top 25 participants who successfully break the most behaviors in each wave, plus $24,250 in prizes for the top 40 participants with the most breaks overall. (Wave 4 is rolled into the overall leaderboard.)

Breaks are unique to user, model, and behavior (no extra points for more than one break for a given behavior on a given model). Ties are broken by speed.

- $7,000 in Quantity-Based Leaderboards:

Grab your share of a $7,000 prize pool, with $1,000 per model divided proportionally across all breaks on that model ($2,000 for Picasso).

For example, if Model Klimt has 200 total breaks, each break will end up being worth $5. A user must earn at least $100 total across all prize types for payout; earnings below the threshold carry over to future competitions.

- $6,500 in First Break Bounties:

Score a $250 bonus for being the first person to break all of the target behaviors from a wave on a given model ($375 for Picasso), for a total of $1,625 available per wave.

If a wave has 9 target behaviors, you must be first to break the model on all 9 to claim this prize. Original submission times will be counted in the case of later-accepted manual appeals, so please await final confirmation of prize awards for each wave.

- $5,000 for First-Time Breakers:

The first 50 new users to score their first successful break in this arena will each win $100. Welcome to red-teaming!

New users whose first break is after Visual Vulnerabilities starts are eligible. Users who scored successful breaks before 1:00 pm EDT on March 19 are ineligible.

- Fast-Tracked Job Interviews at Gray Swan:

The top 10 users by overall number of breaks will be fast-tracked in job interviews at Gray Swan, if interested.
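The prize arithmetic above can be checked with a rough sketch. The dollar figures come from the Rewards list; the record layout, the function names `unique_breaks` and `quantity_payouts`, and the sample users are hypothetical and do not reflect Gray Swan's actual scoring code. Tie-breaking by speed is not modeled.

```python
from collections import Counter

# Figures quoted in the Rewards section (USD).
PER_WAVE_PRIZE = 5_750      # per-wave leaderboard, waves 1-3
OVERALL_PRIZE = 24_250      # overall leaderboard
QUANTITY_POOL = 7_000       # quantity-based leaderboards
BOUNTY_PER_WAVE = 1_625     # first break bounties, four waves
FIRST_TIMER_PRIZE = 100     # first 50 new breakers
PAYOUT_THRESHOLD = 100      # minimum total earnings for payout

total = (
    3 * PER_WAVE_PRIZE + OVERALL_PRIZE   # $41,500
    + QUANTITY_POOL                      # $7,000
    + 4 * BOUNTY_PER_WAVE                # $6,500
    + 50 * FIRST_TIMER_PRIZE             # $5,000
)
assert total == 60_000


def unique_breaks(submissions):
    """Collapse repeats: one break per (user, model, behavior)."""
    return {(s["user"], s["model"], s["behavior"]) for s in submissions}


def quantity_payouts(breaks, model_pools):
    """Split each model's pool evenly across all unique breaks on it."""
    per_model = Counter(model for _, model, _ in breaks)
    earnings = Counter()
    for user, model, _ in breaks:
        earnings[user] += model_pools[model] / per_model[model]
    return dict(earnings)


# Hypothetical data: 100 users with 2 breaks each on Klimt = 200 breaks.
subs = [
    {"user": f"user{u}", "model": "Klimt", "behavior": f"behavior{b}"}
    for u in range(100)
    for b in range(2)
]
subs.append(dict(subs[0]))  # a repeated break earns nothing extra

breaks = unique_breaks(subs)
earnings = quantity_payouts(breaks, {"Klimt": 1_000})
print(f"user0 earns ${earnings['user0']:.2f}")  # 2 breaks at $5 each

# The threshold applies to earnings across all prize types; only the
# quantity-based share is shown here, so nobody clears it in this example.
payable = {u: amt for u, amt in earnings.items() if amt >= PAYOUT_THRESHOLD}
print(f"{len(payable)} users at or above the payout threshold")
```

With 200 unique breaks on Klimt, each break is worth $5, as in the example in the list. A user with only two such breaks would fall below the $100 threshold unless other prizes make up the difference.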

Rules of Engagement

- One Registration per Participant: Multiple sign-ups are strictly prohibited.

- No Sharing Jailbreaks: All submissions must remain private until 30 days after the event ends.

- Don't Overfeed: The majority of the harmful or erroneous content must be generated by the model itself, not regurgitated from a participant's input prompt.

- Images Required: Breaks must rely on the image(s) to count. Text-only breaks don’t count. Breaks that would have worked if the text in the image(s) were submitted as text, without the image(s), don’t count.

- Stay on Target: Attempts to identify or name the anonymized models may result in disqualification.

- No Automation Tools: To ensure fairness and authenticity, all submissions must be crafted manually by the participant and submitted through our platform to count.

Get Started

- Sign in or Register to access the arena.

- Review the Behavior Targets and plan your strategy.

- Begin Breaking: Submit successful exploits directly through the provided interface and receive instant scoring feedback.

Why This Challenge Matters:

As AI systems integrate vision, understanding their failure modes is more important than ever. By discovering and documenting exploits, you’re helping AI developers patch critical weaknesses and improve safety. Gray Swan AI believes in “hacking for good” – pushing AI to its breaking points in order to build stronger defenses. The Visual Vulnerabilities challenge is not just a competition; it’s a collaborative effort to future-proof the next generation of AI.

Are you ready to see what these vision models are really made of? The Arena awaits. Challenge starts Mar 19 – see you there! 🦢🎯